NLP Processing

NLTK

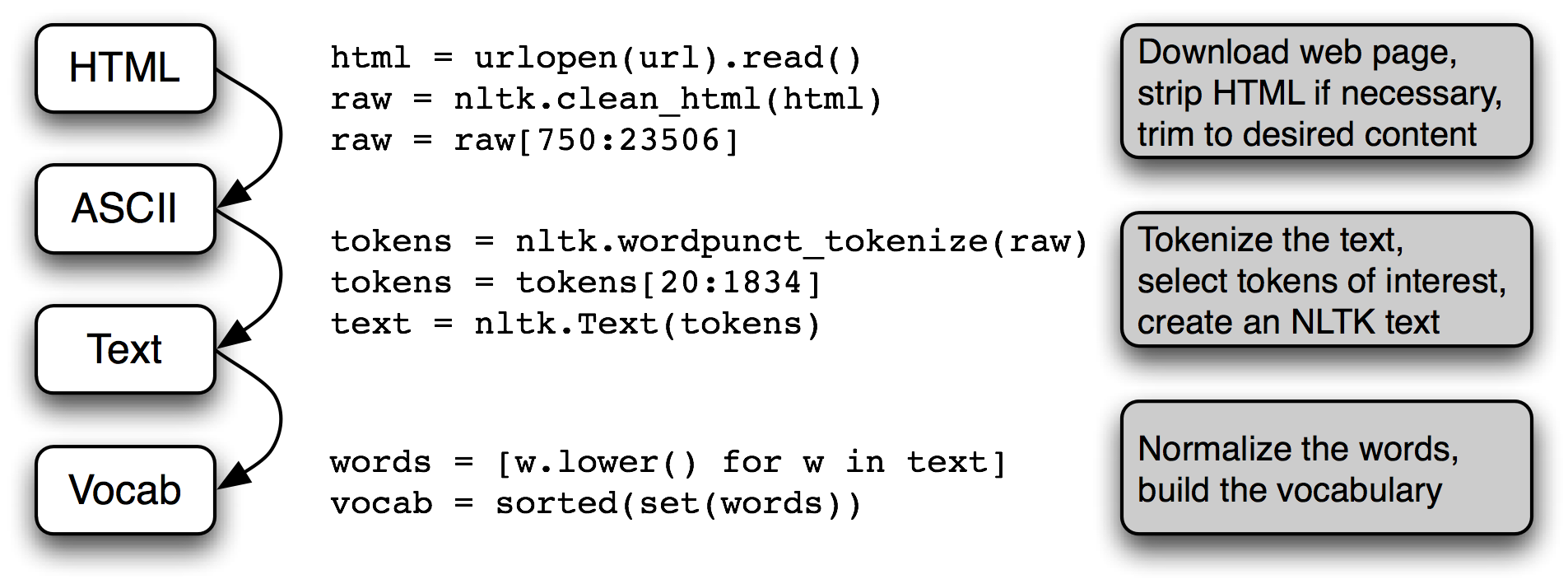

NLTK is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, wrappers for industrial-strength NLP libraries, and an active discussion forum.

Beautiful Soup

Beautiful Soup is a Python library for extracting data from HTML and XML files. It works with your favorite parser to provide idiomatic ways of navigating, searching, and modifying the parse tree. It can save programmers hours or days of work.

Reading PDF Files

Stemming

- Porter Stemmer

- Lancaster Stemmer

Example:

from nltk.stem import PorterStemmer, LancasterStemmer

porter = PorterStemmer()

lancaster = LancasterStemmer()

print(porter.stem("running")) # Output: run

print(lancaster.stem("running")) # Output: run

Tokenization

import re

import nltk

raw = "This is a sample sentence, showing off the stop words filtration."

tokens = re.split(r'\s+', raw)

print(tokens)

# Using NLTK's regexp_tokenize

tokens = nltk.regexp_tokenize(raw, pattern=r'\w+')

print(tokens)

Sentence Segmentation

import nltk

import pprint

text = nltk.corpus.gutenberg.raw('chesterton-thursday.txt')

sents = nltk.sent_tokenize(text)

pprint.pprint(sents[79:89])

Additional Useful Libraries

- spaCy: Fast, production-ready NLP library with pre-trained models.

- TextBlob: Simple library for processing textual data.

- gensim: Topic modeling and document similarity analysis.