Useful Commands

Connect to a secondary of the replica set m103-repl:

mongo --port 27004 --authenticationDatabase admin -u m103-admin -p m103-pass

Connect to the primary:

mongo --port 27001 --authenticationDatabase admin -u m103-admin -p m103-pass

rs.status()

rs.isMaster()

rs.print*

db.getMongo()

// can read from a secondary node

// We have to run rs.slaveOk() before we can read from secondary nodes.

rs.slaveOk()

// it works

show dbs

Keep an odd number of nodes in a replica set. Priority determines how likely a node is to become primary during an election; the default priority is 1. If we don’t want a node to become primary, set its priority to 0, which makes it a passive node.

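Question

In a cluster where the primary keeps changing automatically, how can data be read and written reliably, given that writes cannot target a fixed IP?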
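One common answer, in line with the `--host "m103-example/..."` form used later in these notes, is to connect with the replica set name and a seed list of members rather than a single fixed address, so that the driver or shell can discover the current primary itself. Below is a minimal sketch of building such a connection string. The helper name `buildReplicaSetUri` is hypothetical, and the hosts and credentials are simply the lab values from these notes.

```javascript
// Hypothetical helper: builds a replica set connection string from a seed list.
// A driver uses the seed list to discover the set and locate the current primary.
function buildReplicaSetUri({ user, password, hosts, replicaSet, authSource }) {
  const credentials = `${encodeURIComponent(user)}:${encodeURIComponent(password)}`;
  const params = new URLSearchParams({ replicaSet, authSource });
  return `mongodb://${credentials}@${hosts.join(",")}/?${params.toString()}`;
}

// Example values taken from the m103-repl lab in these notes.
const uri = buildReplicaSetUri({
  user: "m103-admin",
  password: "m103-pass",
  hosts: ["localhost:27001", "localhost:27002", "localhost:27003"],
  replicaSet: "m103-repl",
  authSource: "admin",
});
console.log(uri);
```

Because the string names the set rather than one node, an election that moves the primary does not require the client to change its configuration.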

What is Replication?

Replication is the concept of maintaining multiple copies of your data.

- Availability

- Replica Set

- Primary Node

- Secondary Node

- Fail Over

- Election/Vote

- Sync data

MongoDB Replica Set

Read more about the Simple Raft Protocol and the Raft Consensus Algorithm.

- Protocol V1

- oplog

- delayed nodes

- replica sets are groups of mongod

- HA and failover

- members can have different roles and specific purposes

The configuration file for the first node (node1.conf):

storage:
  dbPath: /var/mongodb/db/node1
net:
  bindIp: 192.168.103.100,localhost
  port: 27011
security:
  authorization: enabled
  keyFile: /var/mongodb/pki/m103-keyfile
systemLog:
  destination: file
  path: /var/mongodb/db/node1/mongod.log
  logAppend: true
processManagement:
  fork: true
replication:
  replSetName: m103-example

Creating the keyfile and setting permissions on it:

sudo mkdir -p /var/mongodb/pki/

sudo chown vagrant:vagrant /var/mongodb/pki/

openssl rand -base64 741 > /var/mongodb/pki/m103-keyfile

chmod 400 /var/mongodb/pki/m103-keyfile

mkdir -p /var/mongodb/db/node1

mongod -f node1.conf

cp node1.conf node2.conf

cp node2.conf node3.conf

vi node2.conf

node2.conf

storage:
  dbPath: /var/mongodb/db/node2
net:
  bindIp: 192.168.103.100,localhost
  port: 27012
security:
  keyFile: /var/mongodb/pki/m103-keyfile
systemLog:
  destination: file
  path: /var/mongodb/db/node2/mongod.log
  logAppend: true
processManagement:
  fork: true
replication:
  replSetName: m103-example

node3.conf

storage:
  dbPath: /var/mongodb/db/node3
net:
  bindIp: 192.168.103.100,localhost
  port: 27013
security:
  keyFile: /var/mongodb/pki/m103-keyfile
systemLog:
  destination: file
  path: /var/mongodb/db/node3/mongod.log
  logAppend: true
processManagement:
  fork: true
replication:
  replSetName: m103-example

mkdir /var/mongodb/db/{node2,node3}

mongod -f node2.conf

mongod -f node3.conf

mongo --port 27011

// initiating the replica set:

rs.initiate()

use admin

db.createUser({

user: "m103-admin",

pwd: "m103-pass",

roles: [

{role: "root", db: "admin"}

]

})

Exiting out of the Mongo shell and connecting to the entire replica set:

exit

mongo --host "m103-example/192.168.103.100:27011" -u "m103-admin" -p "m103-pass" --authenticationDatabase "admin"

rs.status()

rs.add("m103:27012")

rs.add("m103:27013")

rs.isMaster()

rs.stepDown()

rs.isMaster()

- Enabling internal authentication in a replica set implicitly enables client authentication.

- When connecting to a replica set, the mongo shell will redirect the connection to the primary node.

Lab reference: creating a replica set

Replication Checklist

12 total, 12 passed, 0 skipped:

[PASS] "localhost:27001 is running"

[PASS] "localhost:27002 is running"

[PASS] "localhost:27003 is running"

[PASS] "Replication is enabled on localhost:27001"

[PASS] "Replication is enabled on localhost:27002"

[PASS] "Replication is enabled on localhost:27003"

[PASS] "Replica set 'm103-repl' has the correct name"

[PASS] "The replica set 'm103-repl' contains localhost:27001"

[PASS] "The replica set 'm103-repl' contains localhost:27002"

[PASS] "The replica set 'm103-repl' contains localhost:27003"

[PASS] "The replica set enforces client authentication"

[PASS] "The replica set m103-repl uses keyfile authentication"

Replication Configuration Document

Replication Commands

rs.status()

rs.isMaster()

db.serverStatus()['repl']

rs.printReplicationInfo()

Local DB: Part 1

Make a data directory and launch a mongod process for a standalone node:

mkdir allbymyselfdb

mongod --dbpath allbymyselfdb

Display all databases (by default, only admin and local):

mongo

show dbs

use local

db.oplog.rs.find()

var stats = db.oplog.rs.stats()

stats.capped

stats.size

stats.maxSize

rs.printReplicationInfo()

Local DB: Part 2

The local database will not be replicated.

Any data written to this database will not be replicated across the different nodes of the set.

The oplog.rs collection contains all operations that will be replicated.

The oplog.rs collection holds all the statements that get replicated across the different replica set members.

We cap the oplog.rs collection instead of dropping it entirely.
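To make "capped" concrete, here is a toy in-memory model of a capped collection. It is only an illustration and not how MongoDB implements the oplog: the class name `CappedLog` is hypothetical, and it caps by entry count for simplicity, whereas the real oplog.rs is capped by size (see `stats.maxSize` above).

```javascript
// Toy model of a capped collection: once it is full, the oldest entries are
// discarded to make room for new ones. (Assumption: capped by entry count here;
// the real oplog is capped by size in bytes.)
class CappedLog {
  constructor(maxEntries) {
    this.maxEntries = maxEntries;
    this.entries = [];
  }

  append(op) {
    this.entries.push(op);
    if (this.entries.length > this.maxEntries) {
      this.entries.shift(); // drop the oldest operation
    }
  }
}

const oplog = new CappedLog(3);
for (let i = 1; i <= 5; i++) {
  oplog.append({ op: "i", ts: i });
}
console.log(oplog.entries.map((e) => e.ts)); // [ 3, 4, 5 ]
```

The practical consequence is that a node that stays offline longer than the window covered by the oplog can no longer catch up from it.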

Reconfiguring a Running Replica Set

Configuring a node to be non-voting and hidden.

node4.conf:

storage:
  dbPath: /var/mongodb/db/node4
net:
  bindIp: 192.168.103.100,localhost
  port: 27014
systemLog:
  destination: file
  path: /var/mongodb/db/node4/mongod.log
  logAppend: true
processManagement:
  fork: true
replication:
  replSetName: m103-example

arbiter.conf:

storage:
  dbPath: /var/mongodb/db/arbiter
net:
  bindIp: 192.168.103.100,localhost
  port: 28000
systemLog:
  destination: file
  path: /var/mongodb/db/arbiter/mongod.log
  logAppend: true
processManagement:
  fork: true
replication:
  replSetName: m103-example


Starting up mongod processes for our fourth node and arbiter:

mongod -f node4.conf

mongod -f arbiter.conf

From the Mongo shell of the replica set, adding the new secondary and the new arbiter:

rs.add("m103:27014")

rs.addArb("m103:28000")

Checking replica set makeup after adding two new nodes:

rs.isMaster()

Removing the arbiter from our replica set:

rs.remove("m103:28000")

Assigning the current configuration to a shell variable we can edit, in order to reconfigure the replica set:

cfg = rs.conf()

Editing our new variable cfg to change topology - specifically, by modifying cfg.members:

cfg.members[3].votes = 0

cfg.members[3].hidden = true

cfg.members[3].priority = 0

Updating our replica set to use the new configuration cfg:

rs.reconfig(cfg)

Which of the following is true about reconfiguring a replica set with rs.reconfig()?

It does not require any of the nodes to be restarted.

When we reconfigure a replica set with rs.reconfig(), we do not need to restart any of the individual nodes.

It does not require any of the configuration files to be updated.

When we reconfigure a replica set with rs.reconfig(), we do not need to update any of the nodes’ configuration files.
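The edit made to `cfg` before calling rs.reconfig() can be sketched as a plain function over the configuration document. This is only an illustration: the helper name `makeHiddenNonVoting` is hypothetical, and the `cfg` object is an abbreviated stand-in for what rs.conf() returns, not real output. The three fields are set together because hidden and non-voting members are expected to have priority 0.

```javascript
// Hypothetical helper: returns a copy of a replica set config document in which
// the member at `index` is hidden, non-voting, and can never become primary.
// The JSON round-trip copy only suits plain data like the stand-in below.
function makeHiddenNonVoting(cfg, index) {
  const copy = JSON.parse(JSON.stringify(cfg));
  Object.assign(copy.members[index], { votes: 0, hidden: true, priority: 0 });
  return copy;
}

// Abbreviated stand-in for the document returned by rs.conf() on m103-example.
const cfg = {
  _id: "m103-example",
  members: [27011, 27012, 27013, 27014].map((port, i) => ({
    _id: i,
    host: `m103:${port}`,
    votes: 1,
    hidden: false,
    priority: 1,
  })),
};

const newCfg = makeHiddenNonVoting(cfg, 3);
console.log(newCfg.members[3]);
```

In the shell, the equivalent change is applied to the live set with rs.reconfig(cfg), which is why no node restarts or config file edits are needed.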

db.createUser({user:"test", pwd:"123456", roles:[{role:"dbOwner", db:"test"}]});

To see which host the shell is currently connected to:

m103-example:SECONDARY> db.getMongo()

connection to 127.0.0.1:27018

By default, MongoDB sends both reads and writes to the primary, and secondaries refuse reads. To allow reads on a secondary:

// first connect to the secondary node

db.setSlaveOk()

Important settings

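// Priority of this node in elections for primary; 0 means it will never be primary.

"priority" : 0

// Hide the secondary so that clients cannot send requests to it.

"hidden": true

// Number of votes this node casts in an election; 0 means it has no vote (non-voting).

"votes": 0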

Lab

Connect to replica set m103-repl

mongo --port 27001 --authenticationDatabase admin -u m103-admin -p m103-pass

Assigning the current configuration to a shell variable we can edit, in order to reconfigure the replica set:

cfg = rs.conf()

Editing our new variable cfg to change topology - specifically, by modifying cfg.members:

cfg.members[3].votes = 0

cfg.members[3].hidden = true

cfg.members[3].priority = 0

Updating our replica set to use the new configuration cfg:

rs.reconfig() can only be run on the primary node.

rs.reconfig(cfg)

Reads and Writes on a Replica Set

We have to run rs.slaveOk() before we can read from secondary nodes (newer shells rename this helper to rs.secondaryOk()).

We cannot write to a secondary node.

Failover and Elections

Keep an odd number of nodes in a replica set. Priority determines how likely a node is to become primary during an election; the default priority is 1. If we don’t want a node to become primary, set its priority to 0, which makes it a passive node.

Nodes with priority 0 cannot be elected primary. Nodes with higher priority are more likely to be elected primary.

When a majority of nodes is unavailable, the remaining nodes all become secondaries: clients can no longer write, and can read only with a read preference that allows secondaries.
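The advice about odd node counts follows from simple majority arithmetic, sketched below. The function names `majority` and `faultTolerance` are hypothetical, and the sketch assumes every member has one vote.

```javascript
// Smallest number of votes that forms a majority of `votingMembers`.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many members can be lost while a primary can still be elected.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [3, 4, 5]) {
  console.log(`${n} nodes: majority ${majority(n)}, tolerates ${faultTolerance(n)} down`);
}
// 3 nodes: majority 2, tolerates 1 down
// 4 nodes: majority 3, tolerates 1 down
// 5 nodes: majority 3, tolerates 2 down
```

Going from three nodes to four raises the majority without raising the number of failures the set can survive, which is why an even count buys nothing.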

Storing replica set configuration as a variable cfg:

cfg = rs.conf()

Setting the priority of a node to 0, so it cannot become primary (making the node “passive”):

cfg.members[2].priority = 0

Updating our replica set to use the new configuration cfg:

rs.reconfig(cfg)

Checking the new topology of our set:

rs.isMaster()

Forcing an election in this replica set (although in this case, we rigged the election so only one node could become primary):

rs.stepDown()

Checking the topology of our set after the election:

rs.isMaster()

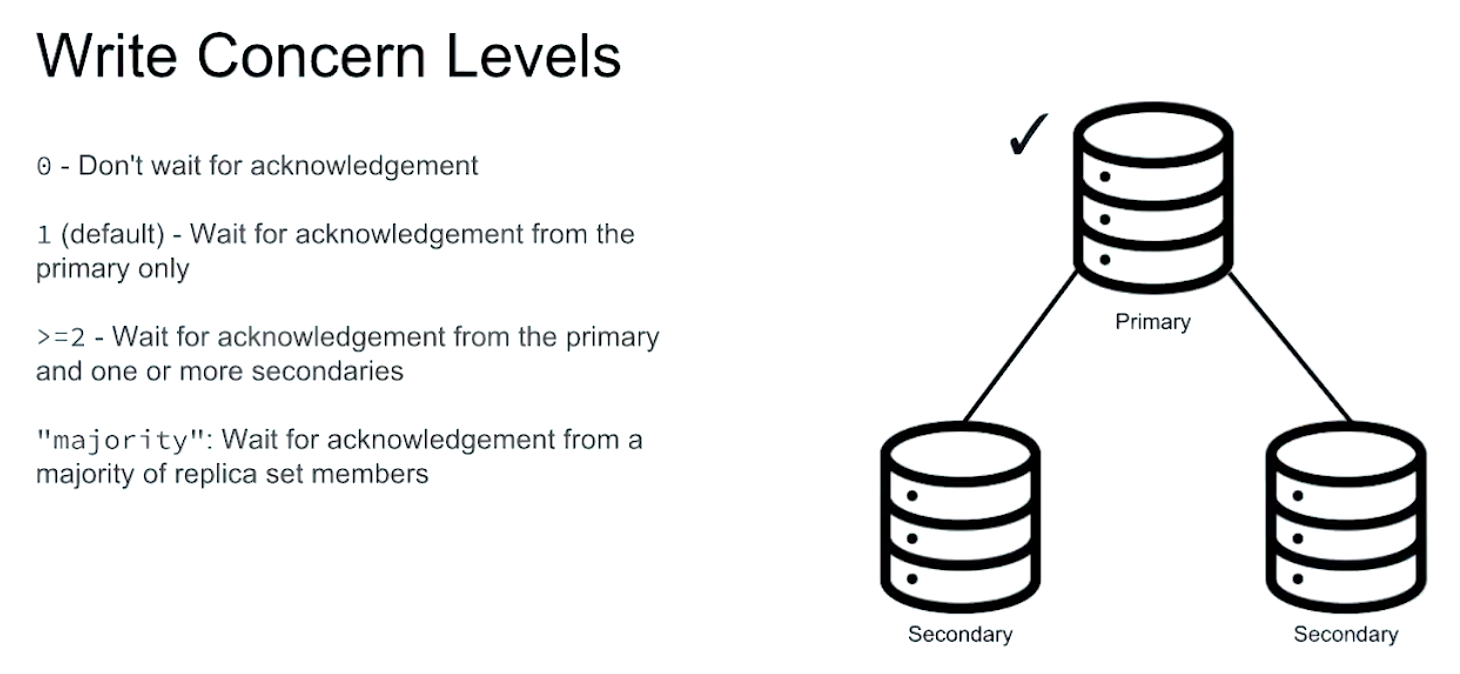

Write Concerns: Part 1

Key term: durability

Lab

When a writeConcernError occurs, the document is still written to the healthy nodes.

This is correct.

The WriteResult object simply tells us whether the writeConcern was successful or not - it will not undo successful writes from any of the nodes.

The unhealthy node will have the inserted document when it is brought back online.

This is correct.

When the unhealthy node comes back online, it rejoins the replica set and its oplog is compared with the other nodes’ oplogs. Any missing operations will be replayed on the newly healthy node.

w: “majority” would also cause this write operation to return with an error.

This is incorrect.

w: “majority” requests acknowledgement that a majority of nodes in a replica set have registered the write. In a three-node replica set, only two nodes are required for a majority, so the two healthy nodes are sufficient to satisfy this writeConcern.

The write operation will always return with an error, even if wtimeout is not specified.

This is incorrect.

If wtimeout is not specified, the write operation blocks indefinitely until the writeConcern is satisfied. If the writeConcern is impossible, like in this example, it may never return anything to the client.
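The reasoning in this lab can be checked with a small sketch that compares the acknowledgements a write concern needs with the number of healthy nodes. The function names are hypothetical, `w: 3` is used here only as an assumed example of a write concern that cannot be met with one node down, and the model ignores arbiters and non-voting members.

```javascript
// Number of acknowledgements needed for write concern `w` in a set of
// `totalMembers` data-bearing, voting members (simplified model).
function requiredAcks(w, totalMembers) {
  return w === "majority" ? Math.floor(totalMembers / 2) + 1 : w;
}

// True if enough healthy members exist to acknowledge the write.
function canBeSatisfied(w, totalMembers, healthyMembers) {
  return healthyMembers >= requiredAcks(w, totalMembers);
}

// Three-node set with one node down:
console.log(canBeSatisfied("majority", 3, 2)); // true
console.log(canBeSatisfied(3, 3, 2)); // false
```

When the check is false and no wtimeout is given, the client simply keeps waiting, which matches the last explanation above.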

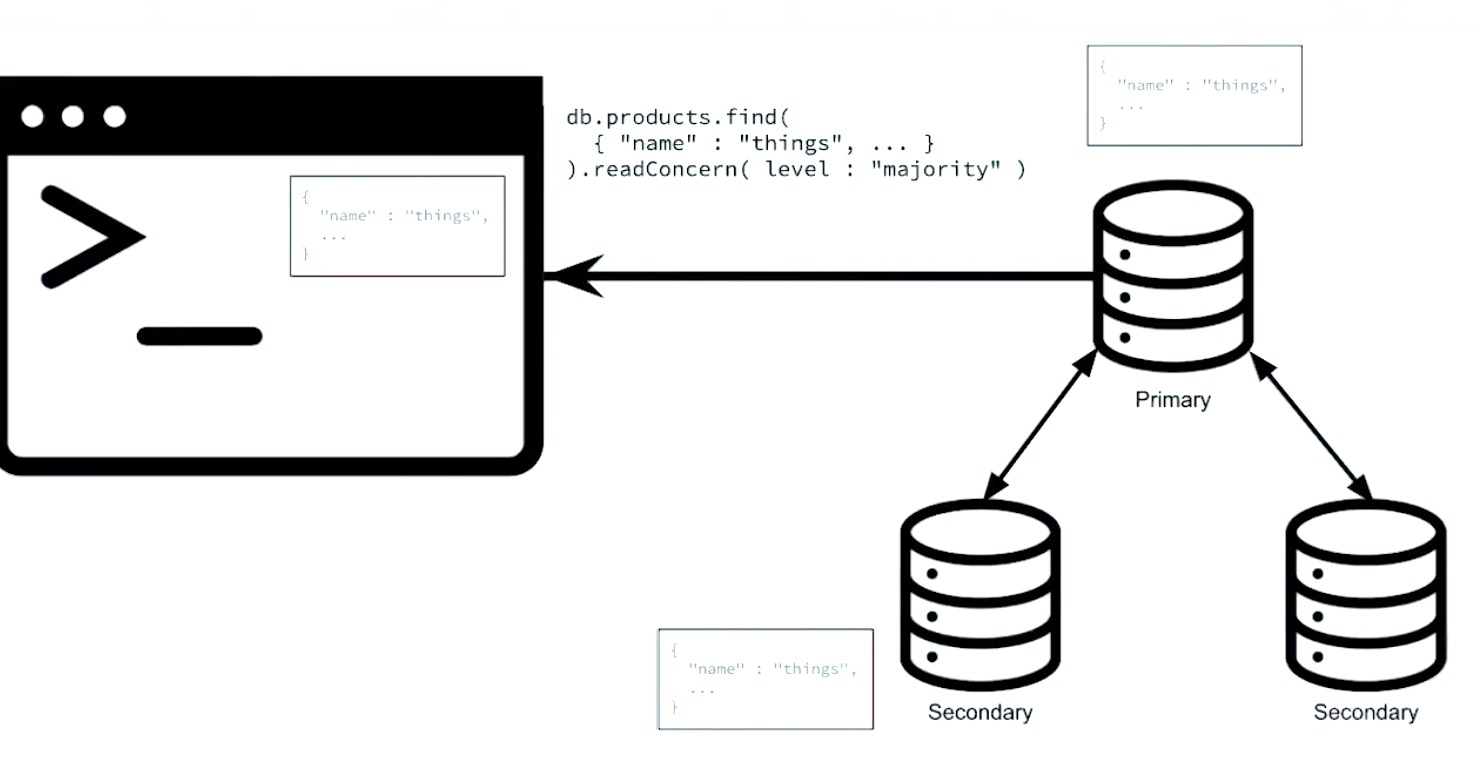

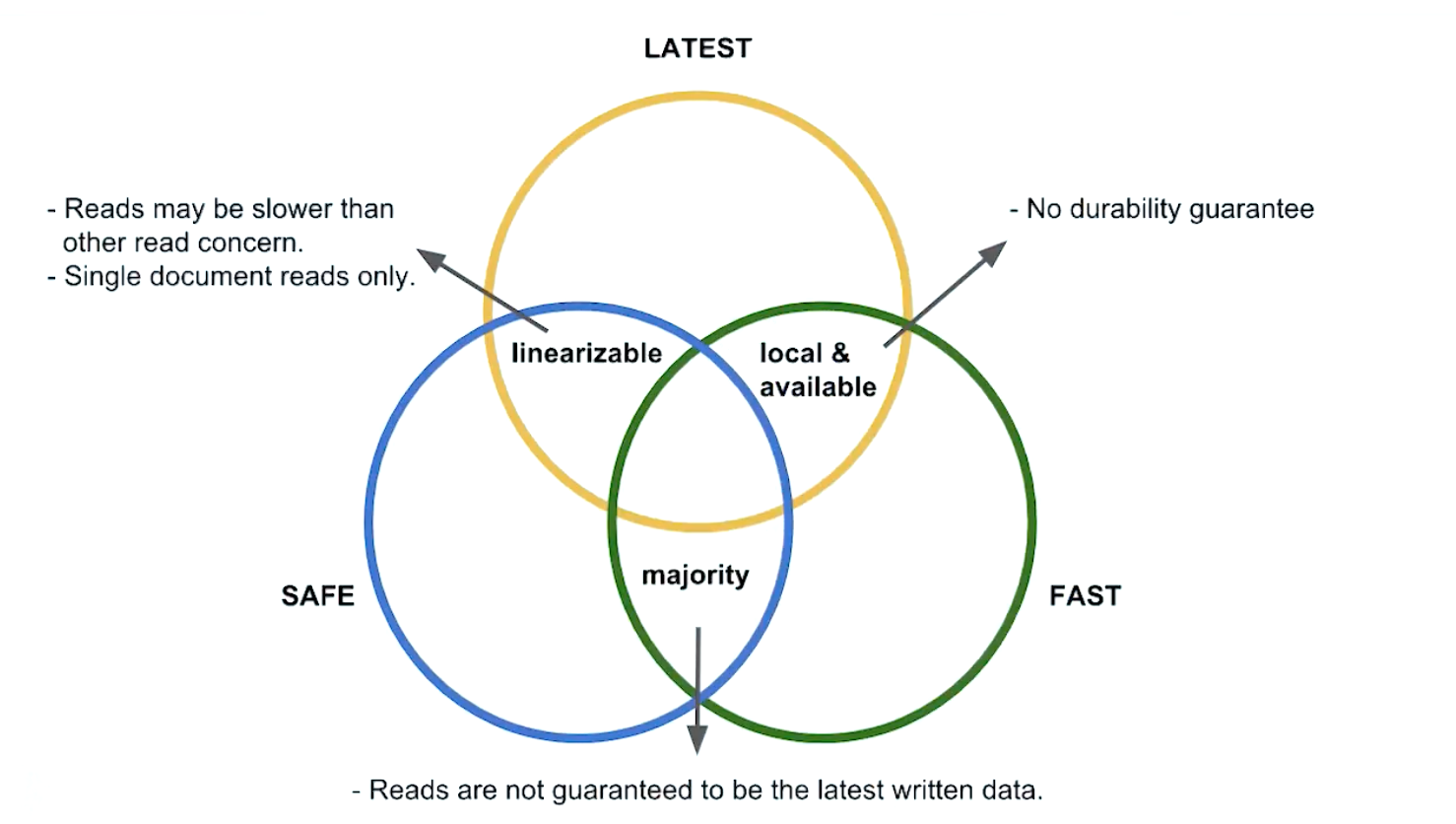

Read Concerns

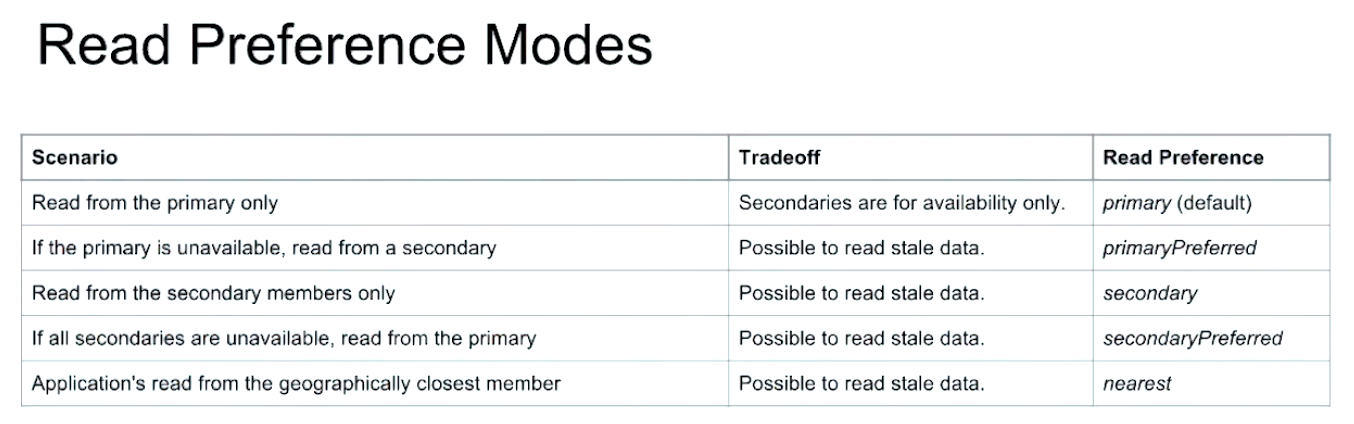

Read Preferences

Read preference allows your applications to route read operations to specific members of a replica set.

- primary (default)

- primaryPreferred

- secondary

- secondaryPreferred

- nearest (minimum latency/geographic)

Lab: Read Preferences

Problem:

Consider a 3-node replica set that experiences a network outage.

Two of the three nodes were unreachable during the outage, leaving one node remaining.

Which of these readPreferences will allow you to read data from this node?

The key concept to understand here is that when two nodes go down in a three-node replica set, the third node becomes a secondary regardless of whether it started as a primary.

Therefore, connecting to the third node is the same as connecting to a secondary node, and any readPreference will work except for primary, which requires all operations to read from the primary node.
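The lab answer can be expressed as a small decision function over which member types are reachable. This is a simplified sketch of the routing rules, not driver code: `canRead` and the `reachable` object are hypothetical names, and latency and tag sets are ignored.

```javascript
const READ_PREFERENCES = [
  "primary",
  "primaryPreferred",
  "secondary",
  "secondaryPreferred",
  "nearest",
];

// Returns true if a read with the given preference can be served, given which
// kinds of members are currently reachable.
function canRead(readPreference, reachable) {
  switch (readPreference) {
    case "primary":
      return reachable.primary;
    case "secondary":
      return reachable.secondary;
    case "primaryPreferred":
    case "secondaryPreferred":
    case "nearest":
      return reachable.primary || reachable.secondary;
    default:
      throw new Error(`unknown read preference: ${readPreference}`);
  }
}

// After the outage only one node is left, and it has become a secondary.
const afterOutage = { primary: false, secondary: true };
const usable = READ_PREFERENCES.filter((rp) => canRead(rp, afterOutage));
console.log(usable);
// [ 'primaryPreferred', 'secondary', 'secondaryPreferred', 'nearest' ]
```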